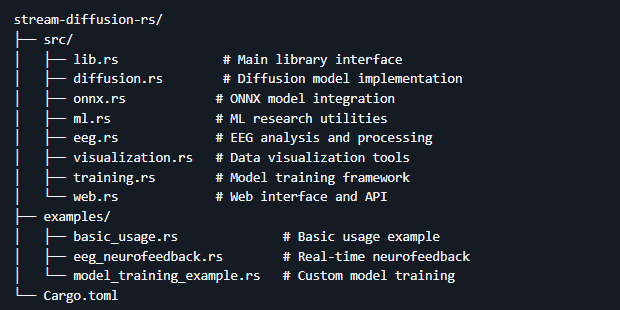

Another important open-source app I'm building for our ecosystem: https://github.com/compiling-org/stream-diffusion-rs. It is very much in alpha; the training module still has errors, but the rest is working.

High-performance multimodal AI and ML research framework in Rust: a comprehensive toolkit for diffusion models, EEG analysis, multisensorial processing, and real-time neurofeedback systems.

🌟 Vision: Multimodal & Multisensorial AI

Combining StreamDiffusion with multimodal and multisensorial AI points toward an emerging field of high-speed, interactive generative AI that can process and create content across several senses at once. StreamDiffusion itself focuses on real-time image and video generation; adding multimodal inputs (e.g., text, images, audio) and multisensorial signals (tactile, thermal, EEG, biometric) yields AI systems that are more contextually rich and responsive.

Stream Diffusion RS extends this vision by providing:

- Multimodal Fusion: Text, image, audio, and biometric data integration
- Multisensorial Processing: EEG, tactile, thermal, and physiological signals
- Real-time Streaming: Sub-10ms latency across multiple data dimensions
- Neuro-Emotive AI: Brain-computer interfaces with emotional intelligence
- Cross-Modal Generation: Converting between different sensory modalities

Language: Rust | License: MIT

🚀 Features

🌈 Multimodal AI Core

- Cross-Modal Fusion: Text, image, audio, and biometric data integration
- Multisensorial Processing: EEG, tactile, thermal, and physiological signal analysis
- Real-time Streaming: Sub-10ms latency across multiple sensory dimensions
- Neuro-Emotive Intelligence: Brain-computer interfaces with emotional context
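
Cross-modal fusion can take many forms, and the feature list does not say which one the crate uses. As an illustration only, here is a minimal weighted late-fusion sketch: it assumes every modality arrives as an embedding of the same dimension, L2-normalizes each one, and takes a weighted average. `Modality`, `fuse`, and the weights are hypothetical names, not the stream-diffusion-rs API.

```rust
use std::collections::HashMap;

/// Input modalities from the feature list (hypothetical enum, not the crate's API).
#[allow(dead_code)]
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub enum Modality {
    Text,
    Image,
    Audio,
    Eeg,
    Biometric,
}

/// Scale a vector to unit L2 norm so no modality dominates by magnitude alone.
fn l2_normalize(v: &[f64]) -> Vec<f64> {
    let norm = v.iter().map(|x| x * x).sum::<f64>().sqrt();
    if norm == 0.0 {
        return v.to_vec();
    }
    v.iter().map(|x| x / norm).collect()
}

/// Weighted late fusion: average the unit-normalized embeddings of whichever
/// modalities are present. Returns None if no usable modality arrived.
pub fn fuse(
    embeddings: &HashMap<Modality, Vec<f64>>,
    weights: &HashMap<Modality, f64>,
    dim: usize,
) -> Option<Vec<f64>> {
    let mut fused = vec![0.0; dim];
    let mut total_weight = 0.0;
    for (modality, emb) in embeddings {
        let w = weights.get(modality).copied().unwrap_or(0.0);
        // Skip modalities with no weight or a mismatched embedding size.
        if w <= 0.0 || emb.len() != dim {
            continue;
        }
        for (f, x) in fused.iter_mut().zip(l2_normalize(emb)) {
            *f += w * x;
        }
        total_weight += w;
    }
    if total_weight == 0.0 {
        return None;
    }
    Some(fused.into_iter().map(|f| f / total_weight).collect())
}
```

Normalizing first keeps a high-magnitude signal from swamping the others, and skipping absent modalities lets the fused vector degrade gracefully when a sensor drops out mid-stream.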

🤖 Diffusion Models

- UNet Architecture: Complete implementation with attention blocks, ResNet blocks, and time embeddings
- DDIM Scheduler: Denoising Diffusion Implicit Models (DDIM) sampling for fast, few-step inference
- Text-to-Image: CLIP text-encoder integration
- Streaming Support: Real-time image generation with progress callbacks
- Multimodal Generation: EEG-to-visual and audio-to-image cross-modal synthesis
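
For readers new to DDIM, the deterministic update (η = 0) first estimates the clean sample x̂₀ = (x_t − √(1−ᾱ_t)·ε) / √ᾱ_t from the predicted noise ε, then moves to x_{t−1} = √ᾱ_{t−1}·x̂₀ + √(1−ᾱ_{t−1})·ε, striding over the training timesteps. The sketch below is not stream-diffusion-rs code: it uses `Vec<f64>` in place of tensors, a closure in place of the UNet noise predictor, and a second closure as the progress callback. The function names and the linear β schedule are assumptions for illustration.

```rust
/// ᾱ_t (cumulative product of 1 - β_t) for a linear β schedule, as in DDPM.
pub fn alphas_cumprod(train_steps: usize, beta_start: f64, beta_end: f64) -> Vec<f64> {
    let mut acc = 1.0;
    (0..train_steps)
        .map(|i| {
            let frac = i as f64 / (train_steps - 1) as f64;
            acc *= 1.0 - (beta_start + (beta_end - beta_start) * frac);
            acc
        })
        .collect()
}

/// One deterministic DDIM step from ᾱ_t to ᾱ_prev given predicted noise `eps`.
pub fn ddim_step(x_t: &[f64], eps: &[f64], a_t: f64, a_prev: f64) -> Vec<f64> {
    x_t.iter()
        .zip(eps)
        .map(|(&x, &e)| {
            let x0 = (x - (1.0 - a_t).sqrt() * e) / a_t.sqrt(); // estimated clean sample
            a_prev.sqrt() * x0 + (1.0 - a_prev).sqrt() * e
        })
        .collect()
}

/// Sample with `num_inference_steps` evenly strided timesteps.
/// `predict_noise` stands in for the UNet; `on_progress(done, total)` fires after each step.
pub fn ddim_sample<F, C>(
    mut x: Vec<f64>,
    ac: &[f64],
    num_inference_steps: usize,
    mut predict_noise: F,
    mut on_progress: C,
) -> Vec<f64>
where
    F: FnMut(&[f64], usize) -> Vec<f64>,
    C: FnMut(usize, usize),
{
    let stride = ac.len() / num_inference_steps;
    for (i, step) in (0..num_inference_steps).rev().enumerate() {
        let t = step * stride;
        let eps = predict_noise(&x[..], t);
        // After the last timestep, ᾱ is taken as 1 (fully denoised).
        let a_prev = if t >= stride { ac[t - stride] } else { 1.0 };
        x = ddim_step(&x, &eps, ac[t], a_prev);
        on_progress(i + 1, num_inference_steps);
    }
    x
}
```

Because every step reuses the same update, the number of inference steps (for example 50 instead of 1000 training steps) is just a stride over the schedule, which is what makes DDIM attractive for real-time streaming.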

🔄 ONNX Integration

- Model Conversion: PyTorch, TensorFlow, JAX, and HuggingFace model support
- ONNX Runtime: High-performance inference with hardware acceleration
- Model Registry: Management system for multiple models
- Burn Compatibility: Seamless integration with Burn tensor operations
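
The post does not describe how the model registry is organized. A minimal sketch, assuming each model has already been exported to an `.onnx` file and is tracked by name, source framework, and version, might look like this. `ModelRegistry`, `ModelEntry`, and `SourceFramework` are hypothetical, and loading the file into ONNX Runtime is deliberately left out.

```rust
use std::collections::BTreeMap;
use std::path::PathBuf;

/// Framework a model was exported from (hypothetical; mirrors the list above).
#[allow(dead_code)]
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum SourceFramework {
    PyTorch,
    TensorFlow,
    Jax,
    HuggingFace,
}

/// Metadata for one converted model.
#[derive(Clone, Debug, PartialEq)]
pub struct ModelEntry {
    pub path: PathBuf, // location of the exported .onnx file
    pub source: SourceFramework,
    pub version: u32,
}

/// Name-keyed registry; a BTreeMap keeps `names()` sorted.
#[derive(Default)]
pub struct ModelRegistry {
    models: BTreeMap<String, ModelEntry>,
}

impl ModelRegistry {
    /// Insert or replace a model, returning the entry it replaced, if any.
    pub fn register(&mut self, name: &str, entry: ModelEntry) -> Option<ModelEntry> {
        self.models.insert(name.to_string(), entry)
    }

    pub fn get(&self, name: &str) -> Option<&ModelEntry> {
        self.models.get(name)
    }

    pub fn names(&self) -> Vec<&str> {
        self.models.keys().map(String::as_str).collect()
    }

    pub fn unregister(&mut self, name: &str) -> Option<ModelEntry> {
        self.models.remove(name)
    }
}
```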

🧠 EEG & Neuroscience

- Signal Processing: Filtering, frequency analysis, artifact removal
- Brain Wave Analysis: Alpha, Beta, Theta, Delta, Gamma band extraction
- Real-time Processing: Circular buffers for streaming EEG data
- Neurofeedback: Real-time brain state monitoring and feedback
- Multisensorial Fusion: EEG + biometric + environmental data integration
- Cross-Modal Translation: Brain waves to visual/audio/artistic expressions
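
Two pieces of the EEG pipeline above lend themselves to a short sketch: a fixed-capacity circular buffer holding the most recent window of samples, and per-band power computed from the spectrum of that window. This version uses a `VecDeque` and a naive O(n²) DFT for clarity; a production pipeline would normally use an FFT plus a window function. The band edges follow common EEG conventions (exact boundaries vary between labs), and `EegRing`, `band_power`, and `BANDS` are hypothetical names rather than the crate's API.

```rust
use std::collections::VecDeque;
use std::f64::consts::PI;

/// Fixed-capacity circular buffer holding the latest samples of one channel.
pub struct EegRing {
    buf: VecDeque<f64>,
    cap: usize,
}

impl EegRing {
    pub fn new(cap: usize) -> Self {
        assert!(cap > 0, "capacity must be positive");
        Self { buf: VecDeque::with_capacity(cap), cap }
    }

    /// Append a sample, discarding the oldest one once the buffer is full.
    pub fn push(&mut self, sample: f64) {
        if self.buf.len() == self.cap {
            self.buf.pop_front();
        }
        self.buf.push_back(sample);
    }

    pub fn is_full(&self) -> bool {
        self.buf.len() == self.cap
    }

    /// Copy the current window out, oldest sample first.
    pub fn window(&self) -> Vec<f64> {
        self.buf.iter().copied().collect()
    }
}

/// Commonly used EEG bands in Hz as (name, low, high); exact edges vary.
pub const BANDS: [(&str, f64, f64); 5] = [
    ("delta", 0.5, 4.0),
    ("theta", 4.0, 8.0),
    ("alpha", 8.0, 13.0),
    ("beta", 13.0, 30.0),
    ("gamma", 30.0, 45.0),
];

/// Sum of |X_k|^2 / n^2 over DFT bins whose frequency lies in [lo, hi).
/// Naive O(n^2) DFT for clarity; use an FFT in a real-time pipeline.
pub fn band_power(x: &[f64], fs: f64, lo: f64, hi: f64) -> f64 {
    let n = x.len();
    let mut power = 0.0;
    for k in 1..=n / 2 {
        let freq = k as f64 * fs / n as f64;
        if freq < lo || freq >= hi {
            continue;
        }
        let (mut re, mut im) = (0.0, 0.0);
        for (i, &v) in x.iter().enumerate() {
            let angle = 2.0 * PI * (k * i) as f64 / n as f64;
            re += v * angle.cos();
            im -= v * angle.sin();
        }
        power += (re * re + im * im) / (n * n) as f64;
    }
    power
}
```

Feeding a 10 Hz sine sampled at 256 Hz through a full 256-sample window puts all of its power (0.25 with this normalization) in the alpha band, which is a handy sanity check before wiring band powers into a neurofeedback loop.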

📊 ML Research Tools

- Data Loading: CSV support, batching, shuffling, cross-validation
- Preprocessing: Normalization, augmentation, feature scaling
- Metrics: MSE, MAE, accuracy, IoU, F1-score, AUC-ROC
- Experiment Tracking: Parameter logging, artifact storage, dashboard
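
Several of the listed metrics and preprocessing steps are only a few lines each. The sketch below shows MSE, MAE, binary F1, IoU on boolean masks, z-score normalization, and a contiguous k-fold split; AUC-ROC, shuffling, and CSV loading are omitted for brevity. All function names are illustrative, not the crate's API, and the slices are assumed to be non-empty and of equal length.

```rust
/// Mean squared error.
pub fn mse(y: &[f64], p: &[f64]) -> f64 {
    y.iter().zip(p).map(|(a, b)| (a - b).powi(2)).sum::<f64>() / y.len() as f64
}

/// Mean absolute error.
pub fn mae(y: &[f64], p: &[f64]) -> f64 {
    y.iter().zip(p).map(|(a, b)| (a - b).abs()).sum::<f64>() / y.len() as f64
}

/// Binary F1 = 2TP / (2TP + FP + FN); 0 when there are no positives at all.
pub fn f1_score(y_true: &[bool], y_pred: &[bool]) -> f64 {
    let (mut tp, mut fp, mut fneg) = (0usize, 0usize, 0usize);
    for (&t, &p) in y_true.iter().zip(y_pred) {
        match (t, p) {
            (true, true) => tp += 1,
            (false, true) => fp += 1,
            (true, false) => fneg += 1,
            (false, false) => {}
        }
    }
    let denom = 2 * tp + fp + fneg;
    if denom == 0 { 0.0 } else { 2.0 * tp as f64 / denom as f64 }
}

/// Intersection over union of two boolean masks; two empty masks count as a match.
pub fn iou(a: &[bool], b: &[bool]) -> f64 {
    let inter = a.iter().zip(b).filter(|(x, y)| **x && **y).count();
    let union = a.iter().zip(b).filter(|(x, y)| **x || **y).count();
    if union == 0 { 1.0 } else { inter as f64 / union as f64 }
}

/// Z-score normalization with the population standard deviation.
pub fn z_score(x: &[f64]) -> Vec<f64> {
    let n = x.len() as f64;
    let mean = x.iter().sum::<f64>() / n;
    let std = (x.iter().map(|v| (v - mean).powi(2)).sum::<f64>() / n).sqrt();
    if std == 0.0 {
        return vec![0.0; x.len()];
    }
    x.iter().map(|v| (v - mean) / std).collect()
}

/// Contiguous k-fold split into (train, validation) index sets.
/// Shuffle the indices first if the data has any ordering.
pub fn k_fold(n: usize, k: usize) -> Vec<(Vec<usize>, Vec<usize>)> {
    (0..k)
        .map(|fold| {
            let (start, end) = (fold * n / k, (fold + 1) * n / k);
            let val: Vec<usize> = (start..end).collect();
            let train: Vec<usize> = (0..start).chain(end..n).collect();
            (train, val)
        })
        .collect()
}
```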

🎨 Visualization

- Plotting Engine: Line plots, scatter plots, histograms, confusion matrices
- EEG Visualizations: Signal plots, topographic maps, spectrograms
- Training Curves: Loss and accuracy monitoring over epochs
- Real-time Dashboard: Live experiment monitoring
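
Before anything is drawn, histograms and confusion matrices reduce to counting. Here is a minimal sketch of that data-preparation step, independent of any plotting backend (the post does not name one); `histogram` and `confusion_matrix` are hypothetical function names.

```rust
/// Equal-width histogram: returns bin counts plus the (min, max) range used.
pub fn histogram(values: &[f64], bins: usize) -> (Vec<usize>, f64, f64) {
    let mut counts = vec![0usize; bins];
    if values.is_empty() || bins == 0 {
        return (counts, 0.0, 0.0);
    }
    let min = values.iter().cloned().fold(f64::INFINITY, f64::min);
    let max = values.iter().cloned().fold(f64::NEG_INFINITY, f64::max);
    let width = (max - min) / bins as f64;
    for &v in values {
        // The maximum value lands in the last bin rather than one past the end.
        let idx = if width == 0.0 { 0 } else { (((v - min) / width) as usize).min(bins - 1) };
        counts[idx] += 1;
    }
    (counts, min, max)
}

/// Confusion matrix: rows are true classes, columns are predicted classes.
pub fn confusion_matrix(y_true: &[usize], y_pred: &[usize], num_classes: usize) -> Vec<Vec<usize>> {
    let mut m = vec![vec![0usize; num_classes]; num_classes];
    for (&t, &p) in y_true.iter().zip(y_pred) {
        m[t][p] += 1;
    }
    m
}
```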

🎓 Training Framework

- Multiple Optimizers: SGD, Adam, RMSProp with configurable parameters
- Loss Functions: MSE, Cross-Entropy, Binary Cross-Entropy, Huber loss
- Model Interface: Trainable trait with forward/backward passes
- Checkpointing: Automatic model saving and loading
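
The post mentions a `Trainable` trait with forward and backward passes but does not show its signature. The sketch below assumes a deliberately simplified scalar version of such a trait, implements it for a one-feature linear model, and trains it with Adam on an MSE loss. The trait signature, `Linear`, `Adam`, and `train_epoch` are all hypothetical and only meant to show how the pieces fit together.

```rust
/// Hypothetical, simplified take on a trait with forward/backward passes.
pub trait Trainable {
    fn forward(&self, x: f64) -> f64;
    /// Accumulate parameter gradients given the input and dLoss/dOutput.
    fn backward(&mut self, x: f64, grad_out: f64);
    /// Parameters and their accumulated gradients, index-aligned.
    fn params_and_grads(&mut self) -> (&mut [f64], &mut [f64]);
}

/// y = w * x + b, the smallest model that exercises the trait.
#[derive(Default)]
pub struct Linear {
    params: [f64; 2], // [w, b]
    grads: [f64; 2],
}

impl Trainable for Linear {
    fn forward(&self, x: f64) -> f64 {
        self.params[0] * x + self.params[1]
    }
    fn backward(&mut self, x: f64, grad_out: f64) {
        self.grads[0] += grad_out * x; // dL/dw
        self.grads[1] += grad_out; // dL/db
    }
    fn params_and_grads(&mut self) -> (&mut [f64], &mut [f64]) {
        (&mut self.params[..], &mut self.grads[..])
    }
}

/// Adam with bias-corrected first and second moment estimates.
pub struct Adam {
    lr: f64,
    beta1: f64,
    beta2: f64,
    eps: f64,
    m: Vec<f64>, // first-moment estimates
    v: Vec<f64>, // second-moment estimates
    t: i32,      // step counter for bias correction
}

impl Adam {
    pub fn new(lr: f64, num_params: usize) -> Self {
        Self { lr, beta1: 0.9, beta2: 0.999, eps: 1e-8, m: vec![0.0; num_params], v: vec![0.0; num_params], t: 0 }
    }

    /// One update; also zeroes the gradients for the next accumulation round.
    pub fn step(&mut self, params: &mut [f64], grads: &mut [f64]) {
        self.t += 1;
        let bc1 = 1.0 - self.beta1.powi(self.t);
        let bc2 = 1.0 - self.beta2.powi(self.t);
        for i in 0..params.len() {
            let g = grads[i];
            self.m[i] = self.beta1 * self.m[i] + (1.0 - self.beta1) * g;
            self.v[i] = self.beta2 * self.v[i] + (1.0 - self.beta2) * g * g;
            let (m_hat, v_hat) = (self.m[i] / bc1, self.v[i] / bc2);
            params[i] -= self.lr * m_hat / (v_hat.sqrt() + self.eps);
            grads[i] = 0.0;
        }
    }
}

/// One full-batch epoch of MSE training; returns the loss before the update.
pub fn train_epoch<M: Trainable>(model: &mut M, opt: &mut Adam, data: &[(f64, f64)]) -> f64 {
    let n = data.len() as f64;
    let mut loss = 0.0;
    for &(x, y) in data {
        let err = model.forward(x) - y;
        loss += err * err / n;
        model.backward(x, 2.0 * err / n); // dMSE/dprediction
    }
    let (params, grads) = model.params_and_grads();
    opt.step(params, grads);
    loss
}
```

Keeping gradient accumulation in `backward` and parameter updates in the optimizer means SGD or RMSProp could be swapped in by writing another `step` over the same `params_and_grads` slices.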

🌐 Web Interface

- Gradio-like UI: Modern tabbed interface with JavaScript
- REST API: Full API for image generation, EEG analysis, training
- Interactive Features: Real-time plotting, model management
- File Upload: Support for EEG data and model files
